
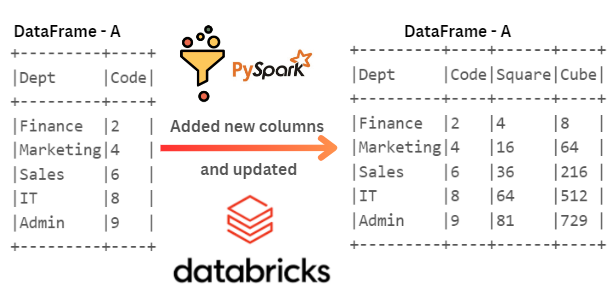

PySpark: Update multiple columns in a DataFrame

Whether you work as a PySpark developer, data engineer, data analyst, or data scientist, you need to be familiar with DataFrames. Data manipulation is the act of transforming, cleansing, and organising raw data into a format that can be used for analysis and decision making, and in PySpark that work is done on DataFrames.

Note: We are using the Databricks environment for this example.

We know that we can add a column to a DataFrame and set its values from the result of a function or from another DataFrame column. In PySpark, you can update multiple columns in a DataFrame using the withColumn method along with the col function from the pyspark.sql.functions module, as shown below:

# Import SparkSession from the pyspark.sql module

from pyspark.sql import SparkSession

from pyspark.sql.functions import col

# Create a Spark session and give it an app name

spark = SparkSession.builder.appName("UpdateMutliColumns").getOrCreate()

When your data is in a list in PySpark, it means you have a collection of data held on the PySpark driver.